How do zip files generated by extraction tools used in digital forensics manage file timestamps?

The zip file specification does not define header fields for creation or access times. The only time field it defines is the last-modified timestamp.

Figure: Zip file specification - Modified Timestamp
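As a rough illustration of that single specification-defined field, the sketch below reads the modified timestamp with Python's standard `zipfile` module and also shows how the underlying 16-bit MS-DOS date and time values are unpacked. The function names `decode_dos_datetime` and `modified_time` are made up for this example. Keep in mind that this field has a two-second resolution and carries no time zone.

```python
import zipfile
from datetime import datetime


def decode_dos_datetime(dos_date: int, dos_time: int) -> datetime:
    """Decode the 16-bit MS-DOS date and time values stored in a zip header."""
    year = ((dos_date >> 9) & 0x7F) + 1980   # 7 bits, counted from 1980
    month = (dos_date >> 5) & 0x0F           # 4 bits
    day = dos_date & 0x1F                    # 5 bits
    hour = (dos_time >> 11) & 0x1F           # 5 bits
    minute = (dos_time >> 5) & 0x3F          # 6 bits
    second = (dos_time & 0x1F) * 2           # 5 bits, stored in 2-second units
    return datetime(year, month, day, hour, minute, second)


def modified_time(zip_file, member: str) -> datetime:
    """Return the modified timestamp zipfile reports for one member.

    zip_file may be a path or a seekable file-like object; member is the
    path of the file inside the zip.
    """
    with zipfile.ZipFile(zip_file) as zf:
        # ZipInfo.date_time is a (year, month, day, hour, minute, second) tuple
        return datetime(*zf.getinfo(member).date_time)
```

The returned value is a naive `datetime`, since the field itself does not say which time zone it was recorded in.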

Full File System (FFS) extractions from mobile devices are zip files, yet when digital forensic tools process them, the creation and access times appear alongside the modified timestamp. Together these timestamps are known as the MAC times. If the only timestamp defined by the specification is the modified one, where are the other two?

Figure: Processed FFS - Notice MAC times for keychain-2.db

The creation and access times are kept in the extra field, which is defined at the end of the specification. When these FFS extractions are opened with common zip managers like 7-Zip or WinRAR, the MAC times are not shown because those managers have not been set to read and interpret the extra field.

Figure: 7-Zip view of keychain-2.db - Notice the empty created and accessed columns
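To make the idea of reading the extra field more concrete, here is a minimal sketch of how such data can be walked. The extra field is a sequence of blocks, each made of a 2-byte header ID, a 2-byte size, and the data. Which block type a given extraction tool writes is not stated here, so the decoder below assumes the Info-ZIP extended timestamp block (header ID 0x5455); a particular FFS extraction may use a different layout. The function names are hypothetical and this is not the logic of the script mentioned below.

```python
import struct
from datetime import datetime, timezone

EXTENDED_TIMESTAMP_ID = 0x5455  # assumption: Info-ZIP extended timestamp block


def iter_extra_blocks(extra: bytes):
    """Yield (header_id, data) for each block found in a zip extra field."""
    offset = 0
    while offset + 4 <= len(extra):
        # Each block starts with a little-endian header ID and data size
        header_id, size = struct.unpack_from("<HH", extra, offset)
        offset += 4
        yield header_id, extra[offset:offset + size]
        offset += size


def parse_extended_timestamp(data: bytes) -> dict:
    """Decode the data of an assumed 0x5455 block into UTC datetimes."""
    if not data:
        return {}
    flags = data[0]  # bit 0: modified, bit 1: accessed, bit 2: created
    times = {}
    pos = 1
    for bit, name in enumerate(("modified", "accessed", "created")):
        # A flag can be set without its value being present, so check length
        if flags & (1 << bit) and pos + 4 <= len(data):
            (seconds,) = struct.unpack_from("<I", data, pos)
            times[name] = datetime.fromtimestamp(seconds, tz=timezone.utc)
            pos += 4
    return times


def mac_times_from_extra(extra: bytes) -> dict:
    """Return the timestamps from the first 0x5455 block, if there is one."""
    for header_id, data in iter_extra_blocks(extra):
        if header_id == EXTENDED_TIMESTAMP_ID:
            return parse_extended_timestamp(data)
    return {}
```

With the standard library, the raw bytes are available as `zipfile.ZipInfo.extra`, which is read from the central directory. The copy stored in the local file header can differ and may need to be read separately.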

To validate the MAC times on these particular FFS extractions, one can use the following Python script: extract_timestamps.py

Script location: https://github.com/abrignoni/Misc-Scripts/

The script needs the path to the FFS extraction and the path, internal to the zip, of the file whose MAC times you would like to see.

Script usage:

Figure: Terminal usage of script

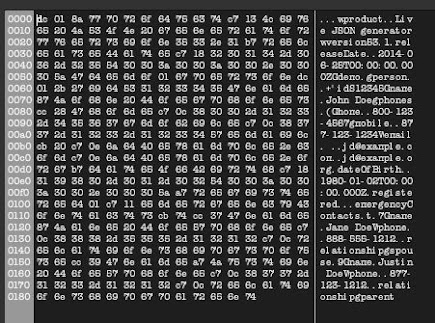

The output is printed to the screen as follows:

Figure: Script output to the screen

Note that the MAC times at the end of the output all come from the extra field, while the modified time at the start is taken from the field the specification defines for that purpose.

I hope this has been informative and helpful. For questions or comments, you can find me on all social media here: https://linqapp.com/abrignoni