Short version

The Badoo Free Chat and Dating app keeps user-generated chats in the following SQLite database:

userdata/data/com.badoo.mobile/databases/ChatComDatabase

The queries at https://github.com/abrignoni/DFIR-SQL-Query-Repo can be used as templates to extract chats from the Badoo database:

- Messages: sender name, recipient name, chat message, create time, modified time, server status, payload.

- User data: user ID, username, gender, age, user image URL, photo URL, max unanswered messages, sending multimedia enabled, user deleted.

By using the MAGNET App Simulator the messages can be easily seen in their native format. The simulator can be downloaded from here:

https://www.magnetforensics.com/resources/magnet-app-simulator/

Long version

The Badoo application is a chat and dating platform for Android and iOS. The app website claims to have over 425,000,000 users and counting.

Figure: Large install base

The app seems to be fairly popular in the Google Play store, with over 4 million reviews.

This analysis came about after a digital forensics examiner asked for help because commercial mobile forensic tools could not parse the app data. I obtained my colleague's consent to use the data sets to create the queries and this accompanying blog post. That said, I will obscure usernames and chat content in the data sets because they are in French, which I do not speak, and I want to avoid publishing something without knowing what it says.

Analysis via SQL queries

The data is kept in the SQLite ChatComDatabase file located in the userdata/data/com.badoo.mobile/databases/ directory. Within the database there are 2 tables containing data of interest.

Conversation_info

This table contains the user IDs, gender, user names, age and profile photo URLs for all the users that chatted with the local Badoo app user. It is of note that the local app user information is not contained within this table. To identify the local user information I emulated the app with the Magnet App Simulator (more on that later) and was able to see the name and age of the local user.

Figure: Username obscured

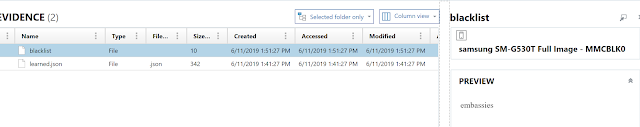

With that information on hand I processed the app directory with Autopsy and did a text search for the user name, which had a hit in the following path and filename:

userdata/data/com.badoo.mobile/files/c2V0dGluZ3M=
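For readers without Autopsy at hand, the same kind of text search can be roughly approximated with a short script. This is only a sketch, not the method used in this analysis: the function name is made up for illustration, and it assumes the username is stored as plain UTF-8 bytes, so it would miss UTF-16 or otherwise encoded strings.

```python
import os


def find_files_containing(root, needle):
    """Return the sorted paths under root whose raw bytes contain needle.

    Assumption: needle is stored as UTF-8 text inside the file.
    """
    target = needle.encode("utf-8")
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, "rb") as handle:
                    # Reads the whole file; fine for small app files.
                    if target in handle.read():
                        hits.append(path)
            except OSError:
                # Skip files that cannot be opened or read.
                continue
    return sorted(hits)
```

Calling `find_files_containing("userdata/data/com.badoo.mobile", "SomeUserName")` on an extracted app directory would list every file containing that name; the username here is a placeholder.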

Note the base64 formatted filename. Using Cyberchef it was easy to convert the base64 filename to ASCII as seen in the next image.
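The same conversion can be reproduced outside Cyberchef with Python's standard library. The helper name below is just for illustration.

```python
import base64


def decode_name(encoded_name):
    """Decode a base64 filename to ASCII text."""
    return base64.b64decode(encoded_name).decode("ascii")


print(decode_name("c2V0dGluZ3M="))  # prints: settings
```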

By looking at the contents of the settings file with Autopsy the following data can be obtained regarding the local user:

- Username

- Birth date

- Telephone numbers

- Weight & height

- Body type

- Workplace

- Sexual orientation

- Political orientation

It is of note that this user generated data will surely vary depending on how much the user adds to their profile. Further testing would be required to confirm.

Regarding the user data of individuals that exchanged messages with the local user, the User data query can be used to get the following column values as seen in the next image.

Messages

This table contains the user IDs, timestamps, and chat messages. The chat messages are contained in a field labeled as payload that holds them in JSON format. It is really easy to extract them using SQLite's json_extract function. For an example of how to use the json_extract function see the following post on Slack app messages parsing:

https://abrignoni.blogspot.com/2018/09/finding-slack-messages-in-android-and.html
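As a quick illustration of json_extract, the sketch below runs it against a throwaway in-memory database. The table layout and the JSON keys `text` and `url` are assumptions made for the example, not the confirmed Badoo schema; only the `"type":"permanent_image"` value comes from the data discussed in this post. It also assumes the local SQLite build includes the JSON functions.

```python
import sqlite3

# In-memory stand-in for the messages table (hypothetical layout).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE messages (id INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany(
    "INSERT INTO messages (payload) VALUES (?)",
    [
        ('{"type": "text", "text": "placeholder message"}',),
        ('{"type": "permanent_image", "url": "https://example.invalid/img.jpg"}',),
    ],
)

# json_extract returns NULL (None in Python) when the key is absent.
rows = conn.execute(
    "SELECT id, json_extract(payload, '$.text') AS chat_text, payload "
    "FROM messages ORDER BY id"
).fetchall()

for row in rows:
    print(row)
```

The second row comes back with a null chat_text, which is why keeping the raw payload column in the result is useful.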

Since the messages are referenced by their user IDs a join select of the messages and conversation_info tables had to be used to determine the sender and recipient names. To do this the select query had to take into account that the local user information was not found within the conversation_info table. This fact made it difficult to join the tables by user_ids since the most important user (the local user) did not have user name data to join. To overcome that obstacle I used two separate query conditions.

- Left join conversation_info on sender_id = user_id

This condition gave me all sender user names, including null rows that had data but no corresponding user name (i.e. the rows for the messages sent by the local user).

- Left join conversation_info on recipient_id = user_id

This condition gave me all recipient user names, including null rows that had data but no corresponding user name (i.e. the rows for the messages received by the local user).

With these two queries on hand the idea was to join both selects by each row's unique ID. This guarantees a one-to-one match between the two selects, so no rows are unnecessarily repeated. Then a simple order by created time puts all the messages in their proper order. I have also added an ifnull condition to the query so that every null username value reads 'local user' instead. The query and the result look as follows:

Figure: To see the full query see the previously provided link
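The approach described above can be sketched against a small in-memory database. This is not the query from the repository: the column names (`id`, `sender_id`, `recipient_id`, `created_timestamp`, `user_name`) and the sample rows are assumptions made for illustration, so check them against the real schema before reusing anything.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Hypothetical, simplified versions of the two tables of interest.
    CREATE TABLE conversation_info (user_id TEXT PRIMARY KEY, user_name TEXT);
    CREATE TABLE messages (
        id INTEGER PRIMARY KEY,
        sender_id TEXT,
        recipient_id TEXT,
        created_timestamp INTEGER,
        payload TEXT
    );
    -- User 100 is the local user and is absent from conversation_info.
    INSERT INTO conversation_info VALUES ('200', 'Contact A');
    INSERT INTO messages VALUES (1, '100', '200', 1000, '{}');
    INSERT INTO messages VALUES (2, '200', '100', 2000, '{}');
    """
)

QUERY = """
SELECT
    s.id,
    ifnull(s.sender_name, 'local user')    AS sender_name,
    ifnull(r.recipient_name, 'local user') AS recipient_name,
    s.created_timestamp
FROM (
    -- Sender names; NULL where the sender is the local user.
    SELECT m.id, m.created_timestamp, c.user_name AS sender_name
    FROM messages AS m
    LEFT JOIN conversation_info AS c ON m.sender_id = c.user_id
) AS s
JOIN (
    -- Recipient names; NULL where the recipient is the local user.
    SELECT m.id, c.user_name AS recipient_name
    FROM messages AS m
    LEFT JOIN conversation_info AS c ON m.recipient_id = c.user_id
) AS r ON s.id = r.id
ORDER BY s.created_timestamp
"""

result = conn.execute(QUERY).fetchall()
for row in result:
    print(row)
```

Joining the two selects on the message ID keeps exactly one output row per message, and ifnull fills in the missing local user name on whichever side it is absent.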

It is of note that I have added the payload data field with all the JSON content in it. This was important since some of the JSON content might not be a chat message but data regarding a shared image. When the chat_text field is null in the query results the examiner can simply go to the contents of the payload field to determine additional information like upload ID, expiration timestamp and the URL of the image itself. In the preceding image notice how the chat_text null field rows say "type":"permanent_image" in the payload field.
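When many rows have a null chat_text, a small helper can summarize what the payload holds instead. The sketch below assumes each payload is a JSON object with a `type` key, as in the `"type":"permanent_image"` rows mentioned above; the function name and the `url` key in the usage note are illustrative only.

```python
import json


def describe_row(chat_text, payload):
    """Summarize one query result row from its chat_text and payload."""
    if chat_text is not None:
        return {"kind": "text", "text": chat_text}
    try:
        data = json.loads(payload)
    except (TypeError, ValueError):
        # Payload is missing or not valid JSON; keep it for manual review.
        return {"kind": "unparsed", "raw": payload}
    if not isinstance(data, dict):
        return {"kind": "unparsed", "raw": payload}
    details = {key: value for key, value in data.items() if key != "type"}
    return {"kind": data.get("type", "unknown"), "details": details}
```

For example, `describe_row(None, '{"type": "permanent_image", "url": "https://example.invalid/a.jpg"}')` returns the kind `permanent_image` together with the remaining payload fields.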

I plan to have these queries submitted to the MAGNET Artifact Exchange Portal soon.

MAGNET App Simulator

Figure: Main screen

As stated previously I used the simulator to identify local user data by visualizing the app data through the app itself. The process is simple and straightforward.

The first thing to do is extract the app APK from the device.

Figure: Load the APK

Then load the app directory.

Figure: Load app directory

The simulator brings up an Android instance within VirtualBox, installs the APK, and injects the app data into this new virtualized app instance.

Figure: Installing, importing, & injecting

The results are incredible.

Figure: Chats viewed with the app itself, as intended

Conclusion

This analysis was interesting to me for a couple of reasons. The first one underlines the importance of always doing a manual visual check of what apps are available in our extractions and how many of these are parsed by our tools. The difference requires manual attention since the most important piece of data might reside where it is not readily found. The second reason is that simulation or virtualization of apps does not substitute for manual database analysis, and that both techniques can and should be used together to guide a deeper analysis of the application data. Without the combination of both techniques the rich repository of local user data might have gone unnoticed, since it was accessible neither in the databases nor in the virtualized screens.

To end I would like to thank not only those who contribute to the DFIR field with tools, scripts and data sets but also those who reach out to ask questions because they want to learn and grow. Truly there is no better way to learn than by trying to fill the gaps of things yet to be known.

As always I can be reached on Twitter @AlexisBrignoni and email 4n6[at]abrignoni[dot]com.