Short version:

Microsoft Translator for Android (MT) keeps pertinent data in the following files and locations:

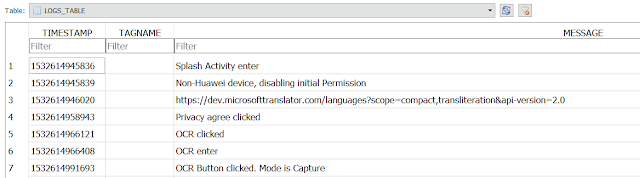

- Logs.db

- Location: /userdata/data/com.microsoft.translator/databases/

- Contents: Tracks all user app interactions with the corresponding activity timestamp.

- File type: SQLite database.

- MP3 files ('LAME' value in the file header)

- Location: /userdata/data/com.microsoft.translator/files

- Contents: Audio of the translated response by the app.

- Filename format: 5 groups of hexadecimal characters separated by hyphens (8chars - 4chars - 4chars - 4chars - 12chars). No extension.

- com.microsoft.translator_preferences.xml

- Location: /userdata/data/com.microsoft.translator/shared_prefs

- Contents: XML file containing translations and related metadata in JSON format. The file ties translations to the corresponding OCR images. If location data was available when an image was taken, the GPS coordinates are recorded in this file. GPS metadata does not seem to remain with the image itself.

- JPG images

- Location: /userdata/media/0/Android/data/com.microsoft.translator/files/download

- Contents: .jpg files taken when the translate-from-camera-picture functionality is used.
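If you want to find the audio files in an extraction without opening each one, a minimal Python sketch can combine the two traits listed above: an 8-4-4-4-12 hexadecimal filename with no extension, and the 'LAME' string near the start of the file. The 4096-byte header window and the function names are my own assumptions, not anything defined by the app.

```python
import re
from pathlib import Path

# 8-4-4-4-12 hexadecimal groups separated by hyphens, no extension
GUID_NAME = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)


def looks_like_lame_mp3(header: bytes) -> bool:
    """True if the given header bytes contain the 'LAME' encoder tag."""
    return b"LAME" in header


def find_translation_audio(files_dir: str, header_size: int = 4096) -> list[Path]:
    """List GUID-named, extensionless files whose first bytes mention LAME."""
    hits = []
    for path in sorted(Path(files_dir).iterdir()):
        # fullmatch so names with an extension or extra characters are skipped
        if not path.is_file() or not GUID_NAME.fullmatch(path.name):
            continue
        with path.open("rb") as fh:
            if looks_like_lame_mp3(fh.read(header_size)):
                hits.append(path)
    return hits
```

Point it at the exported copy of /userdata/data/com.microsoft.translator/files; the export location on your workstation is whatever your tool used.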

Long version:

MT provides language translation services using Microsoft technology on the Android platform.

As noted below, it has amassed over 5 million downloads.

[Image: Don't overlook data.]

For this review I used the Android testing environment described here.

One of the reasons I try to share these simple Android DFIR app reviews is my interest in finding user-generated data no matter where it might be. As stated previously in other blog entries, many examiners depend exclusively on the default parsing capabilities of their tools, which can lead to data being overlooked. In order to go beyond the default parsing capabilities, I do the following:

- Look at all the databases recovered by the tool. Verify whether the tool decodes them. For the ones that are not decoded, do a manual check for relevance.

- Do a manual check of all the userdata folder contents. It is not always the most fun and can be time consuming, but with experience this manual check goes faster. Over time the examiner learns to spot the odd, unexpected folder easily; such folders kinda pop out of the list as items of interest.

- Make sure to run relevant search terms against the image and/or data set directly. Many tools index only file names and the content of parsed artifacts. If you don't run the terms against the whole data set, files that contain the search terms but were not parsed will be missed. Better still...

- Use more than one tool for analysis. This is a must. Heather Mahalik (@HeatherMahalik) makes the point more eloquently here.
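Running terms against the raw data, and not just a tool's index, can be sketched in a few lines of Python. This is a minimal, hypothetical example assuming the userdata folder has been exported to disk: it reads each file's raw bytes and looks for every term encoded as UTF-8 and as UTF-16LE, so hits inside databases, XML files and unparsed blobs are not skipped. It is case-sensitive and reads whole files into memory, so it suits an exported app folder better than a full image.

```python
from pathlib import Path


def encodings_for(term: str) -> list[bytes]:
    """Byte patterns to look for: UTF-8 plus UTF-16LE (SQLite can store either)."""
    return [term.encode("utf-8"), term.encode("utf-16-le")]


def raw_search(root: str, terms: list[str]) -> dict[str, list[str]]:
    """Map each term to the files (relative paths) whose raw bytes contain it."""
    patterns = {t: encodings_for(t) for t in terms}
    hits: dict[str, list[str]] = {t: [] for t in terms}
    base = Path(root)
    for path in sorted(base.rglob("*")):
        if not path.is_file():
            continue
        data = path.read_bytes()  # whole-file read; fine for an app folder
        for term, needles in patterns.items():
            if any(needle in data for needle in needles):
                hits[term].append(path.relative_to(base).as_posix())
    return hits
```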

Analysis

With all of this in mind, I installed the MT app and created some test data. The interface is simple, clean and self-explanatory. This makes sense: since a language app can't assume the language of the user, key functionality is accessed through icons.

[Image: Main app functions.]

For testing, I generated data using the microphone, keyboard and camera. The far-right option is a group translation function that was not used for this review.

For the camera function, the app has the user line up the text for translation. Optical character recognition (OCR) identifies the text and translates it to the desired language. It is of note that the images taken with the app do not appear in the phone gallery and can only be accessed after the fact through the history function in the bottom left corner of the screen, as seen above.

[Image: OCR is not perfect.]

With the microphone, the user speaks and the app responds with a translation, both in writing and audibly.

[Image: Microphone.]

After translating some phrases and using the OCR functionality a physical image of the phone was extracted. I used Magnet Forensics Acquire (no cost!) for the extraction and Autopsy (also no cost!) for the analysis.

File System and Artifacts

The MT application folder is located at /userdata/data/com.microsoft.translator/ and contains the following directory structure:

[Image: Application directory structure.]

In the 'databases' folder there is a SQLite database named Logs.db, which records all user interactions with the application along with a timestamp for each. It even records when the user clicked the privacy agreement when the app was first run. Timestamps are in Unix epoch format. Details on how to convert them to human-readable time can be found here.

[Image: Activity logs.]
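Before writing queries against Logs.db, it helps to see what the database holds. The Python sketch below does not rely on the actual Logs.db schema, since table and column names are not covered here: it opens a copy of the database read-only, lists every table with its row count, and converts a Unix epoch value to UTC. Treating values above 10^11 as milliseconds is an assumption; confirm the unit against a known event from your own test timeline, such as the first-run privacy agreement click.

```python
import sqlite3
from datetime import datetime, timezone


def list_tables(db_path: str) -> dict[str, int]:
    """Return {table_name: row_count} for every table in the database."""
    # mode=ro opens the working copy read-only so it is not modified
    con = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
    try:
        names = [row[0] for row in con.execute(
            "SELECT name FROM sqlite_master WHERE type='table' ORDER BY name")]
        counts = {}
        for name in names:
            quoted = name.replace('"', '""')  # escape quotes in identifiers
            counts[name] = con.execute(f'SELECT COUNT(*) FROM "{quoted}"').fetchone()[0]
        return counts
    finally:
        con.close()


def epoch_to_utc(value: int) -> str:
    """Convert Unix epoch seconds or milliseconds to an ISO-8601 UTC string."""
    # Heuristic (assumption): values above 10**11 are treated as milliseconds
    seconds = value / 1000 if value > 10**11 else value
    return datetime.fromtimestamp(seconds, tz=timezone.utc).isoformat()
```

For example, `epoch_to_utc(1500000000)` and `epoch_to_utc(1500000000000)` both return `'2017-07-14T02:40:00+00:00'`.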

In the 'shared_prefs' folder a file named com.microsoft.translator_preferences.xml contains the main translation artifacts from the app. Below is a small section of the contents of the file. The XML contains JSON formatted content.

[Image: JSON-ception.]

To review the file contents, I processed the file with a simple JSON-to-HTML/Excel Python parser I put together. The script can be found here.
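The XML-wrapping-JSON layout can also be unpacked with nothing but the Python standard library. This sketch is not the parser mentioned above. It assumes the usual Android shared preferences layout (a `<map>` root holding `<string name="...">` entries) and decodes every string value that parses as JSON. ElementTree turns escaped quotes such as `&quot;` back into plain characters before `json.loads` sees them.

```python
import json
import xml.etree.ElementTree as ET


def load_json_prefs(xml_path: str) -> dict[str, object]:
    """Return {pref_name: decoded_json} for <string> prefs that hold JSON."""
    root = ET.parse(xml_path).getroot()  # Android prefs files use a <map> root
    decoded = {}
    for node in root.iter("string"):
        text = (node.text or "").strip()
        if not text.startswith(("{", "[")):
            continue  # plain string preference, not JSON
        try:
            decoded[node.get("name")] = json.loads(text)
        except json.JSONDecodeError:
            pass  # looks like JSON but does not parse; leave for manual review
    return decoded
```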

When the OCR functionality is used, the section of the file that records that activity starts with the location of the captured image on the device and the GPS coordinates, as available through the device's location settings.

[Image: Image name, location and GPS coordinates.]
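Since the image location is stored under an imagePath key, a recursive walk over the decoded JSON can pull out every captured image path together with its coordinates. The imagePath key comes from the file itself. The latitude and longitude key names below are hypothetical placeholders, and the sketch assumes they sit in the same object as imagePath; adjust both to whatever the file on your device shows.

```python
from typing import Any, Iterator


def dicts_with_key(node: Any, key: str) -> Iterator[dict]:
    """Yield every dict, at any depth, that contains `key`."""
    if isinstance(node, dict):
        if key in node:
            yield node
        for value in node.values():
            yield from dicts_with_key(value, key)
    elif isinstance(node, list):
        for item in node:
            yield from dicts_with_key(item, key)


def ocr_captures(prefs: Any, lat_key: str = "latitude",
                 lon_key: str = "longitude") -> list[tuple]:
    """(imagePath, lat, lon) per OCR record; lat/lon key names are assumptions."""
    return [(d["imagePath"], d.get(lat_key), d.get(lon_key))
            for d in dicts_with_key(prefs, "imagePath")]
```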

It is of note that the images are kept in emulated storage, in a directory associated with the app. The image file itself does not keep the GPS metadata. On my test device these images are not accessible via the Gallery app. The image was recovered from the location stated in imagePath and looks like this:

[Image: Captured image via the app.]
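One way to check that a recovered image carries no location data is to test whether it has an EXIF segment at all, since GPS tags live inside EXIF. The Python sketch below walks the JPEG marker segments up to the start of the compressed image data and reports whether an APP1 'Exif' segment exists. A False result means no EXIF, and therefore no GPS; a True result still needs a full EXIF parser to see whether GPS tags are actually present.

```python
import struct


def has_exif(jpeg: bytes) -> bool:
    """True if a JPEG has an APP1 'Exif' segment before the image data."""
    if jpeg[:2] != b"\xff\xd8":              # SOI marker
        raise ValueError("not a JPEG")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            return False                     # lost marker sync; treat as no EXIF
        marker = jpeg[pos + 1]
        if marker == 0xFF:                   # fill byte before a marker
            pos += 1
            continue
        if marker in (0xDA, 0xD9):           # SOS / EOI: metadata segments are over
            return False
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])  # includes itself
        if marker == 0xE1 and jpeg[pos + 4:pos + 10] == b"Exif\x00\x00":
            return True
        pos += 2 + length                    # skip marker bytes plus segment
    return False
```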

Next is a portion of the translation as recorded in the XML/JSON file.

[Image: Translation, confidence level, text, etc...]

The fields are mostly self-explanatory. Text holds the OCR-identified words and translation holds the response given back by the app. A timestamp for the translation is found at the end of each translated section within the JSON-formatted content.

[Image: Timestamp.]
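To get the OCR translations into a spreadsheet-friendly form, a hypothetical Python sketch can collect every JSON object that holds both the recognized text and its translation and write them to CSV. The key names text, translation and timestamp are assumptions based on the field descriptions above; match them, including capitalization, to the actual file. The timestamp is written raw, since its unit should be verified before converting it.

```python
import csv
from typing import Any, Iterator


def records(node: Any, required: tuple[str, ...]) -> Iterator[dict]:
    """Yield every dict, at any depth, that has all of the `required` keys."""
    if isinstance(node, dict):
        if all(k in node for k in required):
            yield node
        for value in node.values():
            yield from records(value, required)
    elif isinstance(node, list):
        for item in node:
            yield from records(item, required)


def export_translations(prefs: Any, out_csv: str,
                        fields: tuple[str, ...] = ("text", "translation", "timestamp")) -> int:
    """Write one CSV row per translation record; return the row count."""
    rows = list(records(prefs, fields[:2]))  # text + translation identify a record
    with open(out_csv, "w", newline="", encoding="utf-8") as fh:
        # extra keys (e.g. confidence) are dropped; missing ones become ""
        writer = csv.DictWriter(fh, fieldnames=list(fields), extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```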

For translations based on spoken language, the JSON looks like this:

[Image: Translations and timestamps.]

The Id value is the name of the MP3 file that contains the translation spoken in the app-generated voice. Notice the timestamps. These translations can be 'pinned' via the app; when a translation is pinned, a pinnedTimeStamp field appears. These audio files are located at: /userdata/data/com.microsoft.translator/files/
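Because the Id value names the audio file, each spoken translation can be tied back to its MP3 and its pinned status. This Python sketch assumes the spoken-translation entries have already been decoded from the preferences file into a list of dictionaries. The Id and pinnedTimeStamp keys are the ones seen in the file; the function name and report layout are hypothetical.

```python
from pathlib import Path
from typing import Any


def audio_report(entries: list[dict[str, Any]], files_dir: str) -> list[dict[str, Any]]:
    """Pair each spoken-translation entry with its MP3 file and pinned status."""
    base = Path(files_dir)
    report = []
    for entry in entries:
        audio_id = entry.get("Id")
        report.append({
            "id": audio_id,
            # the MP3 is named after the Id value, with no extension
            "audio_present": bool(audio_id) and (base / str(audio_id)).is_file(),
            # pinnedTimeStamp only appears once a translation has been pinned
            "pinned": "pinnedTimeStamp" in entry,
            "pinned_at": entry.get("pinnedTimeStamp"),
        })
    return report
```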

Conclusion

In a good digital forensic exam, the tool interfaces and the automatically generated reports are the beginning of the investigation, not the end. Geolocation artifacts and timestamped activity can be found where least expected.

As always I can be reached on twitter @alexisbrignoni and email 4n6[at]abrignoni[dot]com.