The iOS Nike Run app keeps geolocation data in the following location and files:

- /private/var/mobile/Containers/Data/Application/*UUID*/Documents/activityStore.db

- SQLite database

- Contains metrics for each run to include calories, distance, elevation, latitude and longitude.

SELECT lat.sourceid, datetime(lat.startDateInUtcSeconds, 'unixepoch', 'localtime') as day, lat.startDateInUtcSeconds, lat.value as latitude, long.value as long

FROM metrics as lat left join metrics as long on lat.startDateInUtcSeconds = long.startDateInUtcSeconds

WHERE lat.type = 'latitude' and long.type = 'longitude' and lat.sourceID = integer_value_of_interest

ORDER BY lat.startDateInUtcSeconds

- Latitude and longitude values for a specific point in time reside in different rows of the same table. This makes exporting the data to a mapping application difficult since these programs expect both values to be in one row. In order to export both values in one row a SQL self join query like the one above should be used.

- By eliminating lat.sourceID from the query all GPS data from all runs are returned. Importing all that latitude/longitude data to a mapping application, like Google Earth, will either hang it or map overlapping tracks if the multiple runs covered the same path during different days.

- By equating lat.sourceID to a sourceID of interest a single run (set of related GPS coordinates) can be selected and exported for mapping. In the query above the term integer_value_of_interest is a placeholder term I which should be substituted. The script below selects the appropriate integer for all runs that have geolocation data automatically.

Long version:

One of the apps I use to track my #DFIRFit runs is the Nike + Running app. As I train for the Dopey Challenge races, the app tracks my all progress. It tracks pace, elevation, time, weather and even route per GPS coordinates. These last set of data points are the reason for this blog post.

|

| Nike+ App in the App Store |

Testing Platform

For the analysis I am using the following device:

- iPhone Jailbreaking - Elcomsoft blog

- iOS imaging on the cheap - Sarah Edwards

ssh root@iphoneip -p 22 'tar -cf - /private/var' > data.tarAfter obtaining the logical image in a tar file the contents were extracted and processed with Autopsy.

Analysis

Since the folders where apps store user data use a UUID for a name, I did the following to identify which of these were the ones in use by the Nike+ app.

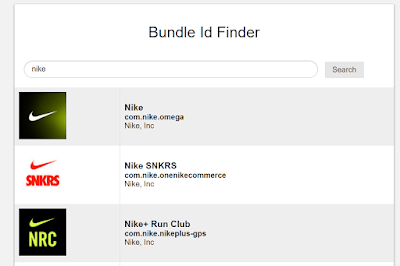

1) Identify the bundle id.

Search the bundle id lookup here.

|

| Bundle Id: com.nike.nikeplus-gps |

2) Go to the following path and file:

/private/var/mobile/Library/FrontBoard/applicationState.db

3) Open the applicationState.db SQLite database. Look for the bundle id in the

'application_identifier_tab' table. Take note of the id.

|

| The id is 103 |

4) Go to the 'kvs' table and export the blobs for id 103 or view their contents in the included binary viewer in the DB Browser for SQLite software for the target UUID numbers. If blob is exported use a bplist viewer like Sanderson Forensics Binary Plist Viewer to get the following:

|

| UUID named directories that hold the Nike+ data. |

Now that we have the UUIDs that relate to the app we can analyze the contents of those directories.

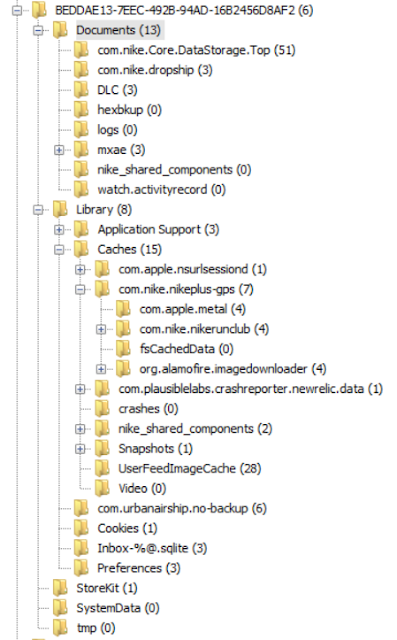

The following image is an overview of the directory structure in use by the Nike+ app.

|

| Nike+ app directories. |

/private/var/mobile/Containers/Data/Application/BEDDAE13-7EEC-492B-94AD-16B2456D8AF2/Documents/activityStore.dbThe activityStore.db SQLite database contains information beyond latitude and longitude. It also tracks pace, descent, ascent, distance, elevation, calories, nike fuel, speed, and horizontal_accuracy. These can be found in the 'metrics' table within the database. The following image shows the fields names defined in this table.

|

| Metrics table. |

The following query selects only rows where the field type contains longitude or latitude as a metric of interest. It also generates a field in the report that converts the UTC time to local time.

SELECT *, datetime(metrics.startDateInUtcSeconds, 'unixepoch', 'localtime') as loctime

FROM metrics

WHERE (metrics.type = 'latitude' or metrics.type = 'longitude') and metrics.sourceID = 4 ORDER BY metrics.startDateInUtcSeconds

|

| Exporting is not that straight forward. |

SELECT lat.sourceid, datetime(lat.startDateInUtcSeconds, 'unixepoch', 'localtime') as day, lat.startDateInUtcSeconds, lat.value as latitude, long.value as longThe query does various things.

FROM metrics as lat left join metrics as long on lat.startDateInUtcSeconds = long.startDateInUtcSeconds

WHERE lat.type = 'latitude' and long.type = 'longitude' and lat.sourceID = integer_value_of_interest

ORDER BY lat.startDateInUtcSeconds

- It creates 2 temporary tables which are copies of the metrics table. This happens at the FROM instruction. Each temporary table is named lat and long respectively.

- A left join is used to combine both tables by the startDateinUtcSeconds field. This is due to the fact that for a recorded location in time there will always be a set of corresponding latitude and longitude values.

- From this joint table the sourceid, startDateinUtcSeconds, startDateinUtcSeconds converted to local time, and the values for latitude and longitude are selected. The sourceid is important because all metrics tracked in a single run will share the same sourceid.

- The selection is constrained to only latitude and longitude values in the WHERE section. Optionally one can constrain further by selecting only a single run for the results. This is accomplished by 'lat.sourceID = integer_value_of_interest' where the integer will be a single run.

It is of note that if the lat.sourceID constraint is removed from the WHERE clause the returned query will contain all the selected fields for all runs. Exporting so many entries will hang most mapping applications. It is better to select a few runs of interest based on case pertinent points in time and export them by individual run or to script the extraction of these from the database. These data sets will be much smaller and easily ingested by the mapping applications. More on scripting at the end.

In the following example I selected all longitude and latitude data where lat.sourceID equaled 4. Based on the date I knew it had to reflect a half marathon I had ran at Disney in Orlando some years ago. I exported the result as a CSV file and used Google Earth for visualization.

Here is how the resulting query looked:

|

| Latitude and longitude share the same row now. SourceID = 4. |

Here is how Google Earth mapped the coordinates:

|

| Yellow pin on the right denotes starting point. |

If we zoom in we can see Epcot Center.

|

| Epcot Center |

We can also see Cinderella's Castle at Magic Kingdom.

|

| Castle right in the middle. |

By changing the integer selected in the lat.sourceID field I could target any individual run and export those coordinates only.

This analysis tell us NOTHING about what device was used to record this data within the app.

As a matter of fact none of the runs in my test device were made with it. The Disney run above happened in 2012 and I don't even have the phone I used back then. We have to be careful and not jump to conclusions. Just because an app registers geolocation data does not mean the device in which the data was found was the one used to generate it. App synchronization requires the examiner to be really careful before making causal determinations. As always careful testing and validation is necessary.

BIG BIG CAVEAT #2

Super important. In order to not miss evidence of possible deleted records do use a forensic SQLite tool to explore WAL records before any manipulation of the database is attempted with non-forensic tools.

The following script will automate the extraction of geolocation data by creating a CSV file per run.

Execute the script in the same directory as the extracted database. CSV files will be generated automatically from all available runs.

https://github.com/abrignoni/NikeRunApp-GeoparserCSV file names generated by the time the run started.

|

| Exported and converted runs from SQLite to CSV |

Conclusions

There are additional artifacts and larger set of metrics recorded in the referenced database for future exploration. Self join queries are awesome. Automating data parsing/conversion is even better.

As always I can be reached on twitter @alexisbrignoni and email 4n6[at]abrignoni[dot]com.